A/B Testing

In content marketing, A/B testing, also known as split testing, is a systematic approach that compares two versions of a webpage to determine which one performs better in achieving predefined goals.

Whether it’s tweaking the color scheme, refining call-to-action buttons, or experimenting with content placement, A/B testing empowers businesses to make data-driven decisions by providing insights into user preferences and behavior.

In this lesson, we provide an overview of A/B testing and its impact on your content management and digital strategies.

Introduction to A/B Testing

Understanding A/B Testing

A/B testing is a powerful tool that you can use to optimize your marketing strategies and improve engagement and conversions.

In this section, we look at the basics of A/B testing and how it can benefit content writers in their quest to create compelling and effective content.

A/B testing, also known as split testing, is a method of comparing two versions of a webpage, email, or other marketing materials to see which one performs better.

By randomly showing different versions to different segments of your audience, you can determine which version is more effective in achieving your goals, whether it’s increasing click-through rates, conversions, or engagement.
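One common way to implement this kind of random split is to hash a visitor identifier, so the same visitor always sees the same version. The sketch below is only an illustration: the function name `assign_variant`, the experiment name, and the visitor IDs are hypothetical, and a testing platform will normally handle this step for you.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a visitor to one variant, stably and roughly evenly."""
    # Combining the experiment name with the visitor ID keeps splits
    # independent across different experiments (an assumed design choice).
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}", "headline-test")] += 1
    print(counts)  # the two counts should be close to an even split
```

Because the assignment depends only on the identifier, a returning visitor does not flip between versions, which keeps the two groups cleanly separated.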

For content writers, A/B testing can provide valuable insights into what resonates with your audience and what doesn’t. By testing different headlines, calls to action, images, or even entire pieces of content, you can identify which elements are most effective in driving engagement and conversions.

When conducting A/B tests, it’s important to have a clear hypothesis and goal in mind. What are you trying to achieve with your test? Are you looking to increase click-through rates, improve conversion rates, or boost engagement? By defining your goals upfront, you can better measure the success of your tests and make informed decisions based on the results.

In addition to setting clear goals, it’s also important to track and analyze the results of your A/B tests. By monitoring key metrics such as click-through rates, conversion rates, and engagement metrics, you can identify trends and patterns that can help you refine your content marketing strategies and improve performance over time.

Overall, A/B testing is a valuable tool for content writers looking to optimize their marketing strategies and boost engagement and conversions. By understanding the basics of A/B testing and implementing best practices, you will create more effective and engaging content that resonates with your audience.

Importance of A/B Testing in Content Marketing

As a content writer, understanding the importance of A/B testing in content marketing is crucial for boosting engagement and conversions. A/B testing involves comparing two versions of a piece of content to see which one performs better in terms of achieving the desired outcome, such as clicks, conversions, or engagement.

One of the main reasons why A/B testing is essential in content marketing is that it provides valuable insights into what resonates with your audience. By testing different elements such as headlines, images, calls to action, or even the overall layout of a piece of content, you can determine what drives the most engagement and conversions. This data-driven approach allows you to make informed decisions about your content strategy, ultimately leading to better results.

Another key benefit of A/B testing in content marketing is that it helps you optimize your content for maximum impact. By continuously testing and analyzing the performance of different variations, you can refine your content to ensure it is as effective as possible. This iterative process allows you to constantly improve your content strategy and stay ahead of the competition.

Furthermore, A/B testing can also help you save time and resources by focusing on what works best for your audience. Instead of relying on guesswork or gut feelings, A/B testing provides concrete data that can guide your content decisions. This data-driven approach can lead to higher ROI and better overall performance for your content marketing efforts.

In conclusion, A/B testing is a powerful tool for content writers looking to boost engagement and conversions. By leveraging the insights gained from testing different variations of content, you can optimize your strategy, save time and resources, and ultimately drive better results for your content marketing efforts.

Setting Up A/B Tests

Defining Goals for A/B Tests

Before diving into the world of A/B testing, it is crucial to clearly define your goals for these experiments.

A/B testing is a powerful tool that can help you optimize your content marketing strategies and increase engagement and conversions. However, without clear goals in mind, it can be easy to get lost in the sea of data and lose sight of what you are trying to achieve.

When defining goals for A/B tests, consider what you want to accomplish with your content:

- Are you looking to increase click-through rates on a specific call-to-action?

- Do you want to improve the conversion rate on a landing page?

- Are you trying to boost engagement with your blog posts?

By clearly defining these goals, you can create A/B tests that are focused and targeted towards achieving specific outcomes.

In addition to setting clear goals, you should also consider the key metrics that you will use to measure the success of your A/B tests. Whether it is tracking click-through rates, conversion rates, or engagement metrics, having a solid understanding of the data that will be used to evaluate the performance of the tests is essential.

By defining goals and metrics for A/B tests, you will ensure that your experiments are purposeful and well-executed. This will not only help you make data-driven decisions about your content marketing strategies but also lead to better engagement and conversions in the long run.

So, before starting any A/B test, take the time to clearly define your goals and metrics to set yourself up for success.

Selecting Variables to Test

When it comes to A/B testing for content marketing strategies, selecting the right variables to test is crucial for achieving meaningful results. It is essential to identify key elements that can have a significant impact on engagement and conversions.

Here are some tips for selecting variables to test in your A/B experiments:

1. Headlines: One of the most important elements to test in your content is the headline. A compelling headline can make a big difference in how many people click on your content and engage with it. Try testing different variations of headlines to see which one resonates best with your audience. (Tip: Use a headline generation tool or even an AI tool like ChatGPT to help you create compelling headlines).

2. Call-to-Action (CTA): The call-to-action is another critical variable to test in your content. Whether you want readers to sign up for a newsletter, download a resource, or make a purchase, the CTA should be clear and compelling. Test different variations of CTAs to see which one drives the most conversions.

3. Visuals: Images and videos can play a significant role in capturing the attention of your audience. Test different visuals to see which ones drive the most engagement and conversions. Pay attention to factors such as color, size, placement, and relevance to your content.

4. Content Length: The length of your content can also impact engagement and conversions. Test different lengths of content to see which performs best with your audience. Some readers may prefer shorter, more concise content, while others may prefer longer, more in-depth pieces.

5. Tone and Language: The tone and language used in your content can also affect how it resonates with your audience. Test different tones (e.g., formal vs. informal) and language styles to see which one connects best with your readers.

By selecting and testing these variables in your A/B experiments, you can gain valuable insights into what resonates best with your audience and optimize your content marketing strategies for maximum engagement and conversions.

Remember to track and analyze the results of your tests carefully to make data-driven decisions for future content creation.

Creating A/B Testing Hypotheses

Formulating Hypotheses for Content Experiments

One of the key steps in conducting successful A/B tests is formulating hypotheses for content experiments.

A hypothesis is a statement that predicts the outcome of an experiment. In the context of content marketing, a hypothesis should be based on a specific goal or objective that you want to achieve with your content.

For example, if your goal is to increase the click-through rate on a call-to-action button, your hypothesis could be that changing the color of the button to red will lead to a higher click-through rate compared to the current color.

When formulating hypotheses for content experiments, it’s important to be clear and specific. Your hypothesis should clearly state the changes you plan to make and the expected outcome. Additionally, your hypothesis should be testable, meaning that you should be able to measure the impact of the changes you make.

To increase the chances of success with your content experiments, it’s also important to base your hypotheses on data and insights. Take the time to analyze your existing content performance metrics and identify areas where improvements can be made. Use this data to inform your hypotheses and make informed decisions about the changes you want to test.

By formulating well-crafted hypotheses for your content experiments, you can set clear goals, measure the impact of your changes, and optimize your content marketing strategies for maximum engagement and conversions.

Remember, A/B testing is a powerful tool that can help you continuously improve and refine your content to achieve your marketing goals.

Understanding Statistical Significance in A/B Testing

Understanding statistical significance in A/B testing is crucial for effectively boosting engagement and conversions in your content marketing strategies.

A/B testing involves comparing two versions of a webpage, email, or any other marketing asset to determine which one performs better in terms of a specific goal, such as click-through rates or conversion rates.

A result is statistically significant when the observed difference in performance between the two versions would be unlikely to arise from random chance alone if the versions actually performed the same.

In other words, it helps you judge whether the observed difference reflects a real effect or is just a fluke.

Determining Statistical Significance in A/B Testing: P-Value

To determine statistical significance in A/B testing, you need to calculate the p-value, which is the probability of obtaining results at least as extreme as the ones observed if the null hypothesis (that there is no difference between the two versions) is true.

Typically, a p-value of less than 0.05 is considered statistically significant.

To calculate the p-value in A/B testing, you generally follow a process that assesses the probability of obtaining test results at least as extreme as the ones observed during the test, under the assumption that there are no differences between the test groups (null hypothesis).

Here’s a simplified overview of the steps involved:

1. Define the Null and Alternative Hypotheses: The null hypothesis (H0) usually states that there is no effect or no difference between the groups, while the alternative hypothesis (H1) suggests that there is a difference.

2. Choose a Significance Level (α): Commonly, a significance level of 0.05 is used, indicating a 5% risk of concluding that a difference exists when there is no actual difference.

3. Collect Data: Conduct your A/B test and collect data for each group. This includes the number of trials and the number of successes (e.g., conversions).

4. Calculate Test Statistic: Depending on the data type and test design, calculate a test statistic (e.g., z-score for proportions).

5. Determine the P-Value: The p-value is calculated based on the test statistic. It represents the probability of observing results at least as extreme as those observed if the null hypothesis were true.

Example

Suppose you’re testing two versions of a webpage (A and B) to see which one results in more conversions. Version A had 200 visitors and 40 conversions, while version B had 200 visitors and 50 conversions.

- Null hypothesis (H0): Conversion rate of A = Conversion rate of B

- Alternative hypothesis (H1): Conversion rate of A ≠ Conversion rate of B

- Significance level (α) = 0.05

Assuming the samples are large enough for the difference in conversion rates to be approximately normally distributed, you could calculate the z-score for that difference and then find the corresponding p-value from a standard normal distribution table or using statistical software.

If the p-value is less than the significance level (0.05 in this case), you reject the null hypothesis, concluding there’s a statistically significant difference between versions A and B.
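For the numbers in this example, the calculation can be sketched with Python's standard library alone. This is a minimal two-sided, pooled two-proportion z-test under the normal approximation; the function name `two_proportion_z_test` is chosen here for illustration and is not part of any library.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Under the null hypothesis both versions share one conversion rate,
    # so the standard error uses the pooled rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Version A: 40 conversions from 200 visitors; version B: 50 from 200.
z, p = two_proportion_z_test(40, 200, 50, 200)
print(f"z = {z:.2f}, p-value = {p:.2f}")
```

With these inputs the z-score comes out at roughly 1.2 and the p-value at roughly 0.23, which is above 0.05. So although version B converted at 25% against 20% for version A, this sample is too small to reject the null hypothesis.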

This example is a simplified explanation. In practice, calculating the p-value involves more detailed statistical analysis, and tools or software like Python’s SciPy library, R, or specialized A/B testing calculators are often used to automate this process.

It’s important to keep in mind that statistical significance does not necessarily mean practical significance. Just because a difference is statistically significant doesn’t always mean it’s meaningful or worth implementing in your content marketing strategy. That’s why it’s important to consider other factors, such as the size of the effect and the practical implications of the results.
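One way to look at the size of the effect is a confidence interval for the difference in conversion rates. The sketch below uses a simple normal-approximation (Wald) interval, which is an assumption made here for brevity; the function name `difference_confidence_interval` is illustrative.

```python
import math
from statistics import NormalDist

def difference_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Return (difference, lower bound, upper bound) for rate B minus rate A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    diff = rate_b - rate_a
    # Unpooled standard error, since the interval does not assume equal rates.
    se = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return diff, diff - z_crit * se, diff + z_crit * se

diff, low, high = difference_confidence_interval(40, 200, 50, 200)
print(f"difference = {diff:.3f}, 95% interval = ({low:.3f}, {high:.3f})")
```

For the example data (40 of 200 versus 50 of 200), the interval runs from about -0.03 to about 0.13. It includes zero, and it is wide enough that the true effect could be anything from slightly negative to a large improvement, which is a useful reminder to judge the plausible range of the effect and not only the p-value.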

By understanding statistical significance in A/B testing, you can make data-driven decisions to optimize your content marketing strategies and improve engagement and conversions.

So, next time you’re running an A/B test, make sure to pay attention to statistical significance to ensure that your results are both reliable and actionable.

Implementing A/B Tests

Tools for A/B Testing in Content Marketing

A/B testing is a crucial tool for determining the effectiveness of various strategies and tactics. By testing different versions of content and analyzing the results, you will gain valuable insights into what resonates with your target audience and drives engagement and conversions.

There are a variety of tools available for conducting A/B tests in your content marketing efforts. These tools range from simple, free options to more advanced, paid platforms that offer a range of features and capabilities.

One popular tool for A/B testing in content marketing is Optimizely. This platform offers a range of features, including multivariate testing, personalization, and targeting capabilities. Optimizely is a paid platform, but its advanced features make it a popular choice for content writers looking to take their A/B testing efforts to the next level.

If you are looking for a more budget-friendly option, tools like VWO and Unbounce offer A/B testing capabilities at a lower price point. These tools are user-friendly and offer a range of features for testing and optimizing content for engagement and conversions.

Regardless of the tool chosen, A/B testing is a valuable practice for improving your content strategies. By testing different versions of content and analyzing the results, you will gain valuable insights into what resonates with your audience and drive better results for your content marketing efforts.

More info: WordPress A/B Testing Plugins

Best Practices for Running A/B Tests

Running successful A/B tests is crucial for boosting engagement and conversions. By following best practices, you will ensure that your tests are accurate, reliable, and provide valuable insights for optimizing your content marketing strategies.

One key best practice for running A/B tests is to clearly define your goals and hypotheses before conducting the test. This will help you stay focused on what you are trying to achieve and ensure that your test results are meaningful.

It is also important to have a large enough sample size to detect a real difference reliably. Running tests with too small of a sample size can lead to unreliable results and false conclusions, because genuine improvements may go undetected and random swings may look like wins.
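A rough estimate of how many visitors each version needs can be made before the test starts. The sketch below uses a standard normal-approximation formula for comparing two proportions; the baseline rate, the expected rate, and the 80% power target are assumptions you would replace with your own figures, and the function name is illustrative.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant for a two-sided test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = NormalDist().inv_cdf(power)          # critical value for power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)

# Assumed scenario: 20% baseline conversion rate, hoping to detect 25%.
print(sample_size_per_variant(0.20, 0.25))
```

For this assumed scenario the estimate is on the order of a thousand visitors per version, and the requirement grows quickly as the difference you want to detect gets smaller.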

Another best practice is to only test one variable at a time. This will help you accurately determine the impact of each change you make to your content.

By testing multiple variables at once, you may not be able to isolate the effects of individual changes, making it difficult to draw clear conclusions from your test results.

Additionally, it is important to set up your tests properly to ensure that your results are accurate. This includes using reliable testing tools, implementing proper tracking mechanisms, and ensuring that your test variations are presented randomly to your audience.

By following these best practices for running A/B tests, you can effectively optimize your content marketing strategies and drive better results. With accurate and reliable test results, you can make data-driven decisions that lead to increased engagement, conversions, and overall success in your content marketing efforts.

Analyzing A/B Test Results

Interpreting Data from A/B Tests

Understanding how to interpret data from A/B tests is crucial for improving the effectiveness of your content marketing strategies. A/B testing allows you to compare two versions of a piece of content to determine which one performs better in terms of engagement and conversions.

However, simply running an A/B test is not enough – you also need to know how to analyze and interpret the data to draw meaningful insights.

One key aspect of interpreting data from A/B tests is statistical significance. This refers to the likelihood that the differences observed in the test results are not due to random chance.

In order to determine statistical significance, you can use statistical tools such as t-tests or chi-squared tests. If the results are statistically significant, you can be more confident in the conclusions drawn from the test.

Another important factor to consider when interpreting A/B test data is the practical significance of the results. This involves looking at the actual impact of the differences observed in the test results on your content marketing goals.

For example, even if a change in a headline leads to a statistically significant increase in click-through rates, if the difference is only a fraction of a percentage point, it may not be worth the effort to implement that change.

In addition to statistical and practical significance, it is also important to consider the context in which the A/B test was conducted. Factors such as the sample size, test duration, and external variables can all impact the results of the test.

By taking these factors into account when interpreting A/B test data, you can ensure that you are making informed decisions to optimize your content marketing strategies.

In conclusion, interpreting data from A/B tests is a crucial skill to boost engagement and conversions. By understanding statistical significance, practical significance, and the context of the test, you can draw meaningful insights from your A/B test results and make data-driven decisions to improve your content marketing strategies.

Making Data-Driven Decisions for Content Optimization

Making informed decisions is crucial for success. With the abundance of data available at your fingertips, you have the power to optimize your content for maximum engagement and conversions.

This section explores the process of making data-driven decisions for content optimization through A/B testing.

A/B testing is a powerful tool that allows content writers to experiment with different variables and determine which version performs better. By testing variations of headlines, calls-to-action, images, and more, writers can gain valuable insights into what resonates with their audience.

Before conducting A/B tests, it is important to define clear objectives and key performance indicators (KPIs). By setting specific goals, you can measure the success of your tests and make informed decisions based on the results.

Once the tests are set up, you must analyze the data to identify patterns and trends. By tracking metrics such as click-through rates, bounce rates, and conversions, you can determine which variations are most effective in driving engagement and conversions.
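The metrics named above are simple ratios, and they can be sketched as follows. The counts are made-up illustrative numbers, the field names are hypothetical, and the exact definitions (especially of bounce rate) vary between analytics tools, so treat these formulas as one common convention.

```python
def summarize_variant(impressions, clicks, sessions, bounces, conversions):
    """Compute basic engagement ratios from raw counts for one variant."""
    return {
        "click_through_rate": clicks / impressions,
        "bounce_rate": bounces / sessions,        # sessions with no further interaction
        "conversion_rate": conversions / sessions,
    }

# Illustrative counts only, not real results.
variant_a = summarize_variant(impressions=5000, clicks=250, sessions=240,
                              bounces=120, conversions=24)
variant_b = summarize_variant(impressions=5000, clicks=300, sessions=290,
                              bounces=130, conversions=35)

for name, metrics in (("A", variant_a), ("B", variant_b)):
    print(name, {k: round(v, 3) for k, v in metrics.items()})
```

Comparing the same ratios side by side for each version makes it easier to see whether a gain in one metric comes at the cost of another.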

From there, you can use this data to make informed decisions about your content optimization strategies. Whether it’s tweaking headlines, adjusting calls-to-action, or refining messaging, A/B testing empowers you to continuously improve your content based on real-time data.

By embracing data-driven decision-making, you can take your work to the next level and achieve better engagement and conversions.

Case Studies in A/B Testing

Successful A/B Testing Examples in Content Marketing

By testing different variations of content and analyzing the results, you can gain valuable insights into what resonates with your audience and drives action.

In this section, we explore some successful A/B testing examples in content marketing that have led to significant improvements in engagement and conversions.

One example of a successful A/B test in content marketing is the use of different headlines for a blog post. By testing two different headlines with the same content, you can determine which headline is more effective at capturing the audience’s attention and driving clicks.

For example, a travel website may test headlines like “Top 10 Destinations to Visit This Summer” versus “Escape to Paradise: The Best Summer Getaways.” By analyzing the click-through rates for each headline, the content writer can identify the most effective headline for driving traffic to the blog post.

Another successful A/B testing example in content marketing is testing different call-to-action (CTA) buttons on a landing page. By testing variations in color, size, and wording of the CTA button, content writers or web designers can determine which version is most effective at encouraging visitors to take action, such as signing up for a newsletter or making a purchase.

For example, an e-commerce website may test a green “Shop Now” button versus a red “Buy Now” button to see which one generates more conversions.

By testing different elements of your content and analyzing the results, you can optimize your strategies and drive better results for your brand.

Lessons Learned from A/B Testing Experiments

A/B testing experiments are a powerful tool for optimizing content marketing strategies and boosting engagement and conversions. Through rigorous testing and analysis, valuable lessons can be learned that can significantly impact the success of your content.

One key lesson learned from A/B testing experiments is the importance of testing one variable at a time. It can be tempting to make multiple changes at once to see immediate results, but this can lead to confusion about which change actually had an impact on performance. By testing one variable at a time, you can accurately measure the impact of each change and make informed decisions based on data.

Another valuable lesson is the significance of setting clear goals before conducting A/B tests. Whether your goal is to increase click-through rates, reduce bounce rates, or improve conversion rates, having a clear objective in mind will help guide your testing strategy and ensure that you are measuring the right metrics.

Additionally, A/B testing experiments have taught many businesses the importance of patience and consistency in applying digital marketing strategies. Results may not be immediate, and it is essential to give your tests enough time to gather meaningful data. By consistently testing and refining your content, you can identify trends and patterns that will ultimately lead to more successful campaigns.

In conclusion, A/B testing experiments offer valuable insights that can transform your content marketing strategies. By testing one variable at a time, setting clear goals, and practicing patience and consistency, you can optimize your content for maximum engagement and conversions. Embracing the lessons learned from A/B testing experiments is essential for staying ahead in the competitive world of content marketing.

Advanced Strategies for A/B Testing

Multivariate Testing in Content Marketing

Multivariate testing in content marketing allows you to test multiple variables simultaneously to determine the most effective combinations for boosting engagement and conversions.

Unlike traditional A/B testing, which only compares two variations, multivariate testing allows for the testing of several variables at once, providing more in-depth insights into what resonates with the target audience.

You can use multivariate testing to experiment with different headlines, images, calls to action, and other elements to see which combination drives the desired results. By testing multiple variables at once, you can quickly identify the most impactful elements and optimize your content accordingly.

One key benefit of multivariate testing is its ability to uncover interactions between different variables. For example, a certain headline may perform well on its own, but when paired with a specific image, the engagement and conversion rates may increase significantly. By testing these combinations simultaneously, you can fine-tune your content to create the most effective messaging for your audience.

To conduct a multivariate test, first identify the variables you want to test and create different combinations for each. You can then use a testing tool to measure the performance of each variation and determine which combination yields the best results.
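Creating the combinations can be sketched with Python's `itertools.product`. The headlines are borrowed from the travel example earlier in this lesson; the image names and button labels are placeholders invented for illustration.

```python
from itertools import product

# Each list holds the options for one variable (placeholder values).
headlines = ["Top 10 Destinations to Visit This Summer",
             "Escape to Paradise: The Best Summer Getaways"]
images = ["beach.jpg", "mountains.jpg"]
ctas = ["Shop Now", "Buy Now"]

# Every combination of one headline, one image, and one call to action.
combinations = [
    {"headline": h, "image": img, "cta": cta}
    for h, img, cta in product(headlines, images, ctas)
]

print(len(combinations))  # 2 x 2 x 2 options
for combo in combinations:
    print(combo)
```

The number of combinations multiplies with every variable you add, so each combination receives a smaller share of your traffic. This is why multivariate tests generally need considerably more visitors than a simple A/B test.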

Personalization and Segmentation in A/B Testing

Personalization and segmentation are crucial components of successful A/B testing. By tailoring your content to specific audience segments, you can enhance engagement and drive conversions.

Personalization involves customizing content based on individual preferences, behavior, and demographics. This can include using a reader’s name, recommending personalized products or services, or delivering content at the right time and through the right channel.

By personalizing your content, you can create a more meaningful and relevant experience for your audience, increasing the likelihood of them taking the desired action.

Segmentation, on the other hand, involves grouping your audience into distinct segments based on shared characteristics or behaviors. This allows you to target your content to specific groups of people, making it more relevant and effective.

For example, you could segment your audience based on their location, age, interests, or previous interactions with your content.
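Once visits are tagged with a segment, test results can be broken down by segment and variant. The sketch below assumes each visit is a small record with hypothetical `segment`, `variant`, and `converted` fields; the sample records are invented for illustration.

```python
from collections import defaultdict

def conversion_by_segment(visits):
    """Return the conversion rate for each (segment, variant) pair."""
    totals = defaultdict(lambda: [0, 0])  # key -> [visitors, conversions]
    for visit in visits:
        key = (visit["segment"], visit["variant"])
        totals[key][0] += 1
        totals[key][1] += int(visit["converted"])
    return {key: conversions / visitors
            for key, (visitors, conversions) in totals.items()}

# Invented sample records, not real data.
visits = [
    {"segment": "new", "variant": "A", "converted": False},
    {"segment": "new", "variant": "A", "converted": True},
    {"segment": "new", "variant": "B", "converted": True},
    {"segment": "returning", "variant": "A", "converted": True},
    {"segment": "returning", "variant": "B", "converted": False},
    {"segment": "returning", "variant": "B", "converted": False},
]

for key, rate in sorted(conversion_by_segment(visits).items()):
    print(key, f"{rate:.2f}")
```

Keep in mind that each segment contains only part of your audience, so per-segment results rest on smaller samples and need the same significance checks as the overall test.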

When conducting A/B tests, it’s important to consider personalization and segmentation to maximize the impact of your content. By testing different variations of personalized and segmented content, you can identify which strategies resonate best with your audience and drive the most conversions.

To effectively implement personalization and segmentation in your A/B testing strategy, start by defining your audience segments and personalization tactics. Use data and analytics to understand your audience’s preferences and behaviors, and tailor your content accordingly.

Test different variations of personalized and segmented content to see what works best, and iterate on your findings to continuously improve your content performance.

By incorporating personalization and segmentation into your A/B testing for content marketing strategies, you can create more engaging and effective content that drives conversions and delivers results for your business.

Optimizing Content for Engagement and Conversions

Applying A/B Testing Results to Improve Engagement Metrics

Once you have conducted your A/B tests and gathered data on how different variations of your content perform, it is crucial to analyze the results and draw actionable insights.

Look for patterns and trends in the data to identify what elements are resonating with your audience and driving the most engagement. This could include things like headlines, images, call-to-action buttons, or even the overall tone and style of your content.

Once you have identified what is working well, it is time to make data-driven decisions to optimize your content. Implement the winning variations from your A/B tests across your content to see if they have a positive impact on engagement metrics.

Keep in mind that it is important to continue testing and refining your content to ensure that you are always improving and staying ahead of the competition.

Another important aspect of applying A/B testing results is to monitor your engagement metrics closely and track the impact of any changes you make.

Use tools like Google Analytics or other data analytics platforms to measure the effectiveness of your content and see how it is performing over time. This will help you to understand what is resonating with your audience and how you can continue to improve your content marketing strategies.

By applying A/B testing results to your content marketing strategies, you can make data-driven decisions that will help you to boost engagement metrics and drive conversions.

Stay proactive, keep testing, and always strive to deliver the best possible content to your audience.

Increasing Conversions Through A/B Testing Techniques

A/B testing involves creating two versions of a piece of content (A and B) with slight variations, such as different headlines, images, or call-to-action buttons. By testing these variations on a sample of your audience, you can determine which version performs better in terms of conversions.

One of the key benefits of A/B testing is that it allows you to make data-driven decisions about your content. Instead of relying on guesswork or intuition, you can use concrete data to determine which elements of your content are most effective at driving conversions.

To effectively implement A/B testing techniques, you should first identify your goals and key performance indicators (KPIs). Whether you’re looking to increase email sign-ups, downloads, or purchases, having clear goals in mind will help you design experiments that are focused and actionable.

Next, you should carefully design your A/B tests, making sure to test only one variable at a time to accurately determine the impact of each change. This may involve testing different headlines, images, or layouts to see which version resonates most with your audience.

Finally, analyze the results of your A/B tests and use this data to inform future content decisions. By continuously testing and optimizing your content, you can ensure that you’re delivering the most engaging and conversion-focused content to your audience.

By implementing these techniques thoughtfully and consistently, you can increase conversions and boost engagement with your audience.

Conclusion

Recap of Key Concepts in A/B Testing for Content Writers

Let’s recap some key concepts in A/B testing to help you boost engagement and conversions in your content marketing strategies.

A/B testing is a powerful tool that allows you to test different versions of your content to see which one performs better with your audience. By analyzing the results of these tests, you can make data-driven decisions to optimize your content and achieve your marketing goals.

One of the key concepts in A/B testing is defining clear and measurable goals for your content. Before you start testing different versions of your content, it’s important to have a clear understanding of what you want to achieve.

Whether your goal is to increase website traffic, improve conversion rates, or boost engagement, having a specific goal in mind will help you design effective tests and interpret the results accurately.

Another important concept in A/B testing is selecting the right variables to test. When conducting A/B tests, it’s crucial to focus on testing one variable at a time to accurately measure the impact of that change on your content’s performance.

Some common variables to test include headlines, call-to-action buttons, images, and copywriting.

Additionally, it’s essential to ensure that your results are statistically significant, meaning the difference you observe between versions is unlikely to be due to chance alone. To get there, you need to test your content with a large enough sample size so that the results are reliable and representative of your audience’s behavior.
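To make "a large enough sample size" more concrete, here is a minimal sketch of one common approximation for comparing two conversion rates. The function name, the 5% significance level, and the 80% power are illustrative assumptions, not values from this lesson, and dedicated testing tools may use slightly different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH version for a two-sided test of two rates.

    baseline_rate: current conversion rate of version A (e.g. 0.10 for 10%)
    expected_rate: conversion rate you hope version B reaches (e.g. 0.12)
    alpha, power:  assumed defaults; adjust them to your own risk tolerance
    """
    if baseline_rate == expected_rate:
        raise ValueError("The two rates must differ to compute a sample size.")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # threshold for the significance level
    z_power = NormalDist().inv_cdf(power)          # threshold for the desired power
    # Sum of the variances of the two conversion rates
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


if __name__ == "__main__":
    # Hypothetical example: lifting a 10% conversion rate to 12%
    print(sample_size_per_variant(0.10, 0.12))
```

Note how the required sample shrinks as the expected difference grows: small improvements need far more visitors to detect than large ones.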

By following these key concepts in A/B testing, you can make informed decisions to optimize your content and drive better results for your content marketing strategies.

Future Trends in A/B Testing for Content Marketing

It’s important to stay ahead of the curve. As technology continues to evolve, so do the trends in A/B testing.

Here are some future trends to keep an eye on:

1. Personalization: In the future, A/B testing will become even more focused on personalization. Content writers will need to create tailored content for specific audience segments to improve engagement and conversions. A/B testing will help determine which personalized content resonates best with different demographics.

2. Artificial Intelligence: AI will play a larger role in A/B testing for content marketing. Machine learning algorithms can process large volumes of test data far faster than manual analysis, making it easier to identify trends and optimize content strategies. Content writers will need to learn how to work alongside AI tools to create more effective A/B tests.

3. Multichannel Testing: As consumers interact with brands on multiple platforms, A/B testing will need to expand beyond just websites and emails. Content writers will need to test different types of content across various channels, including social media, mobile apps, and even voice assistants. This will require a more integrated approach to A/B testing.

4. Real-Time Testing: In the future, A/B testing will become more immediate and responsive. Content writers will be able to test different versions of content in real-time, allowing them to quickly adjust their strategies based on user behavior. This agile approach will help content writers stay ahead of the competition and adapt to changing trends.

By staying informed about future trends in A/B testing, your business can continue to boost audience engagement and conversions in the ever-evolving world of digital marketing.

A/B Testing – FAQs

Here are frequently asked questions about A/B testing:

What is A/B testing?

A/B testing, also known as split testing, is a method of comparing two versions of a webpage or app against each other to determine which one performs better on a specified metric, such as conversion rate, click-through rate, or any other meaningful indicator.

When is A/B testing a good idea?

A/B testing is advantageous when you want to make data-driven decisions about changes to a webpage, app, or marketing strategy. It is particularly useful when you have a clear hypothesis and measurable goals.

When should A/B testing be avoided?

Avoid A/B testing if the goals are unclear, if the testable audience is too small to produce statistically significant results, or if the variations are too minor to drive meaningful differences.

How do you set up an A/B test?

Setting up an A/B test involves defining the goal, choosing the variable to test, creating two versions (A and B), splitting your audience randomly, and then measuring the performance of each version against the defined goal.
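The "splitting your audience randomly" step can be sketched as follows. This is one possible approach, assuming each visitor has a stable identifier; the function name, the experiment label, and the 50/50 split are hypothetical. Hashing the identifier gives an assignment that behaves like a random split while always showing a returning visitor the same version.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "headline-test") -> str:
    """Assign a visitor to version A or B in a repeatable, roughly even way.

    user_id:    any stable identifier (assumed to exist, e.g. a cookie value)
    experiment: hypothetical experiment label, so different tests split differently
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # map the hash to a bucket from 0 to 99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    for visitor in ["user-1", "user-2", "user-3"]:
        print(visitor, assign_variant(visitor))
```

Because the assignment depends only on the identifier and the experiment label, no lookup table is needed to remember who saw which version.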

What are common pitfalls in A/B testing?

Common pitfalls include not running the test long enough to gather sufficient data, changing the test midway, failing to randomize audience segments properly, and basing decisions on differences that are not statistically significant.

How long should you run an A/B test?

The duration of an A/B test should be long enough to achieve statistical significance and account for variations in traffic and behavior. Typically, this can range from a few days to several weeks, depending on the traffic and the metrics being tested.
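As a rough illustration of how traffic drives duration, the sketch below divides the total sample you need by your daily visitors. The function name and the rounding up to whole weeks are assumptions made here (whole weeks are one simple way to cover weekday and weekend differences in behavior), not rules from this lesson.

```python
import math


def estimated_test_days(sample_size_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Estimate how many days a test needs, rounded up to whole weeks.

    sample_size_per_variant: visitors required for each version
    daily_visitors:          visitors entering the test per day, across all versions
    """
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive.")
    days = math.ceil(sample_size_per_variant * variants / daily_visitors)
    return math.ceil(days / 7) * 7  # assumed convention: run for full weeks


if __name__ == "__main__":
    # Hypothetical example: 3,839 visitors per version at 1,000 visitors a day
    print(estimated_test_days(3839, 1000))
```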

What metrics should you track during an A/B test?

The choice of metrics depends on the test’s objectives but commonly includes conversion rates, click-through rates, bounce rates, and average session duration. Choose metrics that directly relate to the business impact of the tested variations.

Can A/B testing be used for anything other than websites?

Yes, A/B testing can also be applied to email marketing campaigns, mobile apps, online ads, and more, wherever there are two alternatives to compare with measurable outcomes.

What is the difference between A/B testing and multivariate testing?

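A/B testing compares two versions that differ in a single variable, whereas multivariate testing changes multiple variables simultaneously to see which combination performs best. Because every combination needs its own share of visitors, multivariate tests require considerably more traffic.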
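To see why multivariate tests grow quickly, the sketch below lists every combination of a few variables. The headlines, images, and button labels are made-up placeholders, and the function name is hypothetical.

```python
from itertools import product


def multivariate_combinations(*variables):
    """Return every combination of the given variables as a list of tuples."""
    return list(product(*variables))


if __name__ == "__main__":
    # Placeholder options for three variables
    headlines = ["Headline 1", "Headline 2"]
    images = ["Image 1", "Image 2", "Image 3"]
    buttons = ["Buy now", "Learn more"]

    combos = multivariate_combinations(headlines, images, buttons)
    print(len(combos))  # 2 x 3 x 2 combinations
    for combo in combos[:3]:
        print(combo)
```

An A/B test of the headline alone would compare only two versions; testing all three variables together means splitting the same audience across every combination.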

How do you analyze A/B testing results?

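Analysis typically involves statistical methods to determine whether the differences in performance between the two versions are significant. For yes/no outcomes such as conversions or clicks, a two-proportion z-test or chi-squared test is commonly used; for continuous metrics such as average session duration, a t-test is a common choice, and analysis of variance (ANOVA) applies when comparing more than two versions.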
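For a conversion-rate comparison, one option is a two-proportion z-test. The following is a minimal sketch using only Python's standard library; the function name and the example counts are illustrative assumptions, and in practice your testing tool or a statistics package would usually run this calculation for you.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value). A small p_value (commonly below 0.05) suggests the
    difference is unlikely to be due to chance alone.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate under the assumption that both versions perform the same
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_error == 0:
        return 0.0, 1.0  # no variation at all, so no detectable difference
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: A converts 200 of 2,000 visitors, B converts 260 of 2,000
    z, p_value = two_proportion_z_test(200, 2000, 260, 2000)
    print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

A positive z means version B converted at a higher rate than version A; the p-value tells you how much confidence to place in that difference.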


***

Image: Arrows

Augmented Reality (AR) enhances the user’s perception of their environment by integrating virtual elements with physical surroundings, creating an interactive experience that can be accessed via smartphones, tablets, AR glasses, and other devices.

Augmented Reality (AR) enhances the user’s perception of their environment by integrating virtual elements with physical surroundings, creating an interactive experience that can be accessed via smartphones, tablets, AR glasses, and other devices.

Content monetization and content management are intricately connected in our digital ecosystem.

Content monetization and content management are intricately connected in our digital ecosystem. In content marketing, A/B testing, also known as split testing, is a systematic approach that compares two versions of a webpage to determine which one performs better in achieving predefined goals.

In content marketing, A/B testing, also known as split testing, is a systematic approach that compares two versions of a webpage to determine which one performs better in achieving predefined goals.

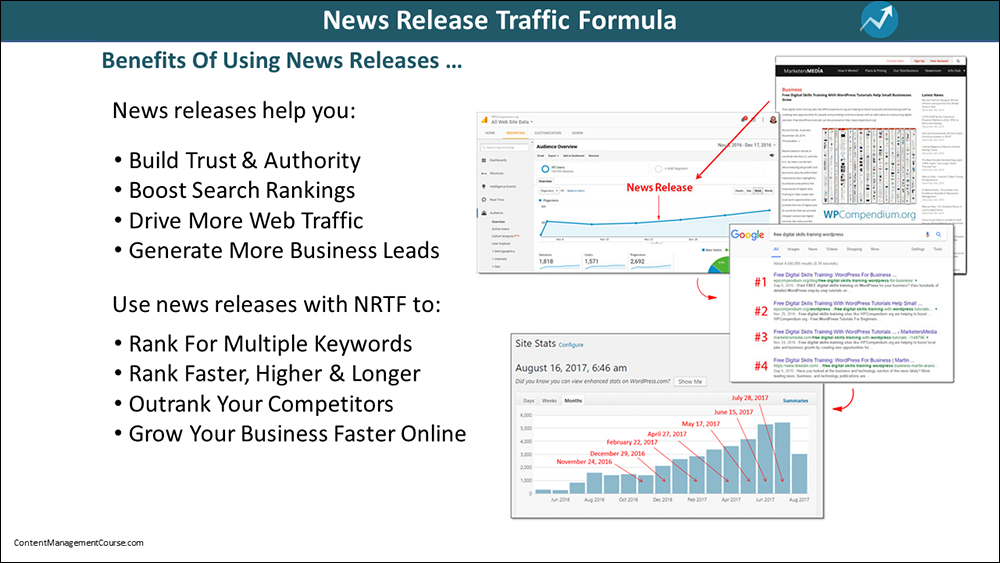

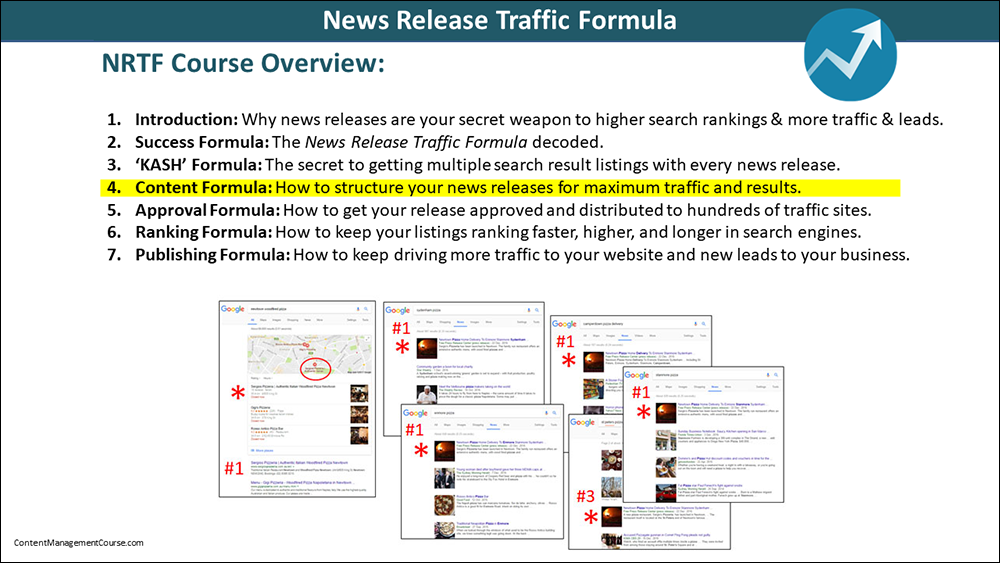

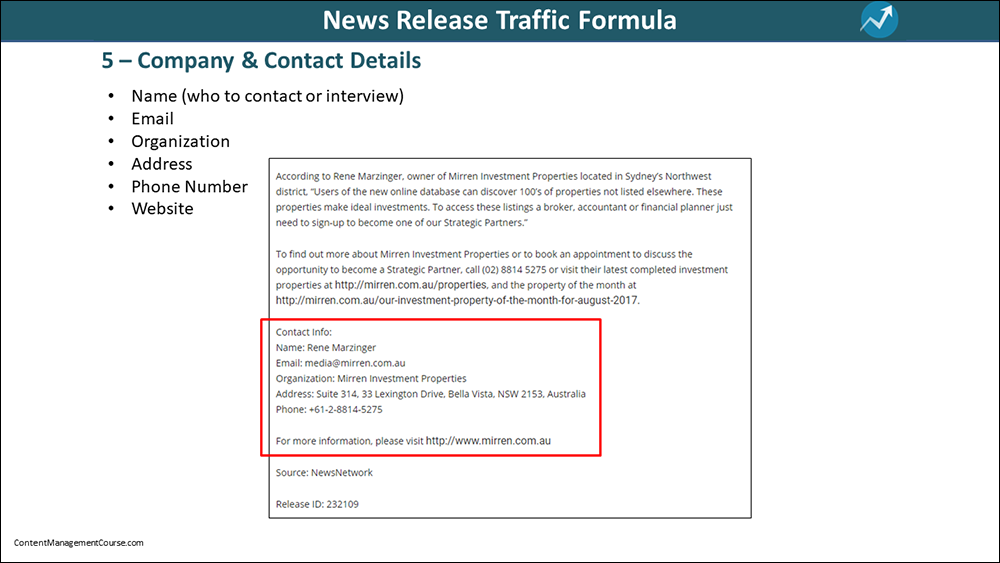

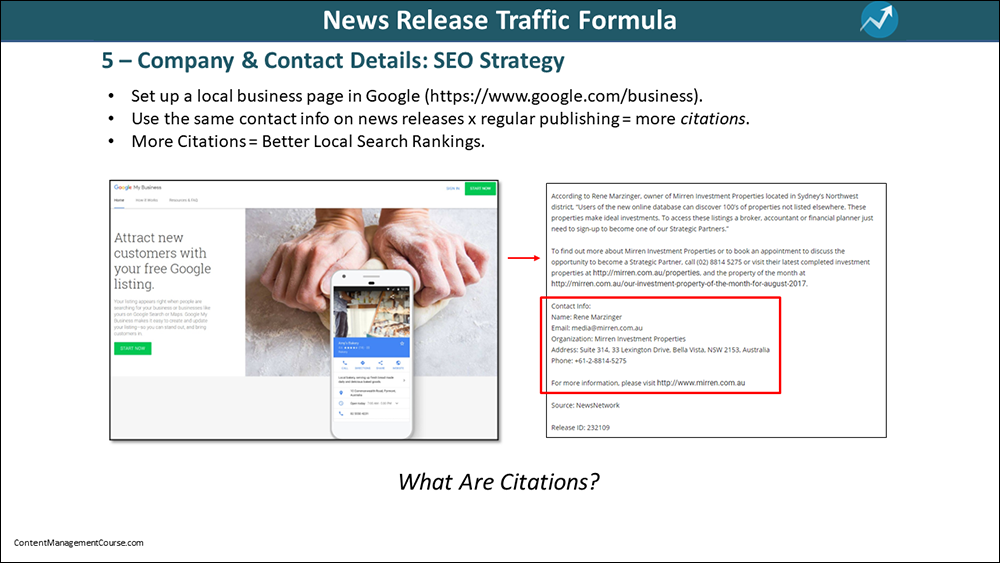

This will also improve your listings on Google maps, boost your brand and authority with Google, and help you outrank your competition.

This will also improve your listings on Google maps, boost your brand and authority with Google, and help you outrank your competition.